With this tutorial we are going to conclude the series about audio analysis using FMOD library and Unreal Engine 4. This time we are going to explain how to make a simple beat tracker in real-time. This algorithm will not be an universal solution for all the songs but is an aceptable approximation taking into account the process time limitations.

Part 1: Setting up project paths

Part 2: Using the library

Part 3: Frequency spectrum

Part 4: UE4 spectrum visualizer

Part 5: Beat tracking algorithm

Part 6: UE4 beat visualizer

There are many methods available and beat onset detection is always a tradeoff between accuracy and speed. One of the papers that explains one of this methods is Beat Detection Algorithms by Frédéric Patin. The basic idea of the algorithm is to use a simple statistical model based on sound energy. We can calculate the average energy of a couple of seconds of the sound before the current playback and compare this with the current energy of the sound, if the difference of energy pass a certain threshold we can say there is a beat.

Using a window size of 1024 samples and a sampling rate of 44100 Hz, we need a buffer of 44100/1024 = 43 elements to store 1 second of history. The values of this samples can be obtained from the FFT analysis, an explanation about how to use the FFT and FMOD can be found in a previous tutorial Frequency spectrum using FMOD and UE4.

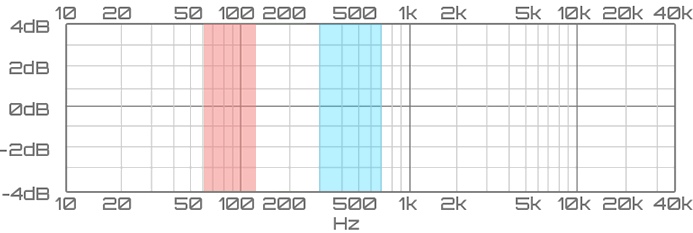

We are going to center the analysis in the first bars of the spectrum, the reason of this is to check the lower frequencies of the sound to catch the use of the kick and snare drum of a battery, one of the most frequently used instruments to track the rhythm of the song. To our experiment we are going to take a bass range of 60hz-130hz, in which we will found the kick drum, and a low midrange 301hz-750hz, where a snare drum sound can be found. The low midrange contains the low order harmonics of most instruments and is generally viewed as the bass presence range.

So we need to take the info of our sound for this ranges taking the corresponding elements of the FFT result. To obtain the frequency of each element of the FFT result we only need to calculate the frequency split (44100/1024 = 43) and multiply it by the index of the data array. So the first component store the result for the range 0-43Hz, the second 43-86Hz, the third 86-129Hz…

The algorithm

Assuming k and k+n as the limits of the actual range to process and FFT[i] as the amplitude of the frequency for the i position. We can calculate the current energy of the range as

![Rendered by QuickLaTeX.com \[ E = \frac{1}{n} \sum_{i=k}^{k+n} FFT[i] \]](https://www.parallelcube.com/web/wp-content/ql-cache/quicklatex.com-99681df86988cb4fe51cd8cf6868c800_l3.png)

We need to store this value with the next 42 samples to obtain a history (H) of 1 second.

![]()

Now the average of the band can be calculated using this history

![Rendered by QuickLaTeX.com \[ avg(H) = \frac{1}{43} \sum_{i=0}^{42} H[i] \]](https://www.parallelcube.com/web/wp-content/ql-cache/quicklatex.com-906bb4e91fe65e1463062a5c2ad54893_l3.png)

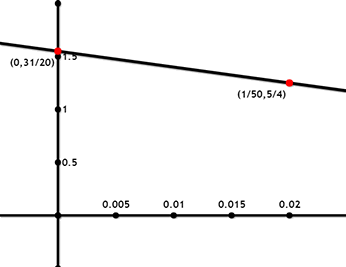

Normally a value that exceeds the average plus its half is a good threshold to detect a beat. But we can adjust this factor using the variance of the history values. On very noisy music like Hard Rock or Rock N’ Roll, the beat detection becomes a bit dodgy so we need to decrease our threshold for higher variance values.

We can define a line (Variance, Threshold) equation to represent the relation between the threshold and the variance. With (0, 1.55) (0.02, 1.25) as two of the points of this line.

![]()

![Rendered by QuickLaTeX.com \[ var(H) = \frac{1}{43} \sum_{i=0}^{42} (H[i] - avg(H))^2 \]](https://www.parallelcube.com/web/wp-content/ql-cache/quicklatex.com-d47d5b3828e9b1363714e81ba318585c_l3.png)

Our FFT results are in range 0..1 so the values of the variance are in range 0..1 too.

Finally a beat is detected if

![]()

The code

Now we can modify the C++ class SoundManager_Fmod of the previous tutorial Frequency spectrum using FMOD and UE4 to add the beat tracking algorithm.

We need to add some variables to track the history buffer, samplingFrequency and the window size, and some the auxiliar functions to calculate the average, variance and threshold values. Besides this we are going to add one function to initialize the beat detector and other one to retrieve the beat analysis result, that will be called with the Update function. To store the History data we are going to use a double-ended queue (deque) container, so we can insert new elements at beginning and remove the oldest at the end.

SoundManager_Fmod.h

public: ... void initializeBeatDetector(); void getBeat(float* spectrum, float* averageSpectrum, bool& isBass, bool& isLowM); private: ... int _windowSize; float _samplingFrequency; typedef std::deque<std::vector<float> > FFTHistoryContainer; int _FFThistory_MaxSize; std::vector<int> _beatDetector_bandLimits; FFTHistoryContainer _FFTHistory_beatDetector; static void fillAverageSpectrum(float* averageSpectrum, int numBands, const FFTHistoryContainer& fftHistory); static void fillVarianceSpectrum(float* varianceSpectrum, int numBands, const FFTHistoryContainer& fftHistory, const float* averageSpectrum); static float beatThreshold(float variance);

SoundManager_Fmod.cpp

To obtain the sampling frequency we can use the getFrequency function of our channel, so we need to add a couple of lines in the playSound function to retrieve the sampling rate and calculate the size of our history for 1 second (_samplingFrequency / _windowSize).

void SoundManager_Fmod::playSound()

{

_FFTHistory_linear.clear();

_FFTHistory_log.clear();

_system->playSound(_sound, 0, false, &_channel);

_channel->getFrequency(&_samplingFrequency);

_FFThistory_MaxSize = _samplingFrequency / _windowSize;

_channel->addDSP(0, _dsp);

_dsp->setActive(true);

}InitializeBeatDetector will be used to calculate the index of the limits for each beat range. For this example we are using two ranges, bass and low midrange, we only need the info of the array elements for the frequencies in range.

void SoundManager_Fmod::initializeBeatDetector()

{

int bandSize = _samplingFrequency / _windowSize;

_beatDetector_bandLimits.clear();

_beatDetector_bandLimits.reserve(4); // bass + lowMidRange * 2

// BASS 60 hz - 130 hz (Kick Drum)

_beatDetector_bandLimits.push_back( 60 / bandSize);

_beatDetector_bandLimits.push_back( 130 / bandSize);

// LOW MIDRANGE 301 hz - 750 hz (Snare Drum)

_beatDetector_bandLimits.push_back( 301 / bandSize);

_beatDetector_bandLimits.push_back( 750 / bandSize);

_FFTHistory_beatDetector.clear();

}The function getBeat has a similar behavior as getSpectrum_ functions but adding the beat tracking algorithm. So it calculates the FFT, take the info of specific frequencies and apply the algorithm for each range, returning the result for each range separately. After the algorithm we need to update de History buffers, removing the oldest elements if needed.

void SoundManager_Fmod::getBeat(float* spectrum, float* averageSpectrum, bool& isBass, bool& isLowM)

{

FMOD_DSP_PARAMETER_FFT* dspFFT = NULL;

FMOD_RESULT result = _dsp->getParameterData(FMOD_DSP_FFT_SPECTRUMDATA, (void **)&dspFFT, 0, 0, 0);

if (dspFFT)

{

// Only read / display half of the buffer typically for analysis

// as the 2nd half is usually the same data reversed due to the nature of the way FFT works.

int length = dspFFT->length / 2;

int numChannels = dspFFT->numchannels;

if (length > 0)

{

int numBands = _beatDetector_bandLimits.size() / 2;

for (int numBand = 0; numBand < numBands; ++numBand)

{

int bandBoundIndex = numBand * 2;

for (int indexFFT = _beatDetector_bandLimits[bandBoundIndex];

indexFFT < _beatDetector_bandLimits[bandBoundIndex + 1];

++indexFFT)

{

for (int channel = 0; channel < numChannels; ++channel)

{

spectrum[numBand] += dspFFT->spectrum[channel][indexFFT];

}

}

spectrum[numBand] /= (_beatDetector_bandLimits[bandBoundIndex + 1] - _beatDetector_bandLimits[bandBoundIndex]) * numChannels;

}

if (_FFTHistory_beatDetector.size() > 0)

{

fillAverageSpectrum(averageSpectrum, numBands, _FFTHistory_beatDetector);

std::vector<float> varianceSpectrum;

varianceSpectrum.resize(numBands);

fillVarianceSpectrum(varianceSpectrum.data(), numBands, _FFTHistory_beatDetector, averageSpectrum);

isBass = (spectrum[0] - 0.05) > beatThreshold(varianceSpectrum[0]) * averageSpectrum[0];

isLowM = (spectrum[1] - 0.005) > beatThreshold(varianceSpectrum[1]) * averageSpectrum[1];

}

std::vector<float> fftResult;

fftResult.reserve(numBands);

for (int index = 0; index < numBands; ++index)

{

fftResult.push_back(spectrum[index]);

}

if (_FFTHistory_beatDetector.size() >= _FFThistory_MaxSize)

{

_FFTHistory_beatDetector.pop_front();

}

_FFTHistory_beatDetector.push_back(fftResult);

}

}

}Finally the code for the auxiliary function to calculate the average, variance and threshold values.

void SoundManager_Fmod::fillAverageSpectrum(float* averageSpectrum, int numBands, const FFTHistoryContainer& fftHistory)

{

for (FFTHistoryContainer::const_iterator fftResult_it = fftHistory.cbegin();

fftResult_it != fftHistory.cend();

++fftResult_it)

{

const std::vector<float>& fftResult = *fftResult_it;

for (int index = 0; index < fftResult.size(); ++index)

{

averageSpectrum[index] += fftResult[index];

}

}

for (int index = 0; index < numBands; ++index)

{

averageSpectrum[index] /= (fftHistory.size());

}

}

void SoundManager_Fmod::fillVarianceSpectrum(float* varianceSpectrum, int numBands, const FFTHistoryContainer& fftHistory, const float* averageSpectrum)

{

for (FFTHistoryContainer::const_iterator fftResult_it = fftHistory.cbegin();

fftResult_it != fftHistory.cend();

++fftResult_it)

{

const std::vector<float>& fftResult = *fftResult_it;

for (int index = 0; index < fftResult.size(); ++index)

{

varianceSpectrum[index] += (fftResult[index] - averageSpectrum[index]) * (fftResult[index] - averageSpectrum[index]);

}

}

for (int index = 0; index < numBands; ++index)

{

varianceSpectrum[index] /= (fftHistory.size());

}

}

float SoundManager_Fmod::beatThreshold(float variance)

{

return -15 * variance + 1.55;

}Conclusion

This tutorial shows one of the methods used to achieve a fast and fairly accurate solution for beat detection. This method can be used when performance is much more important than precision. However, if we wished a more accurate solution we need to use a Discrete Wavelet Transform (DWT). In the paper Audio Analysis using the Discrete Wavelet Transform by George Tzanetakis, Georg Essl and Perry Cook we can found a well explained application of the DWT with a beat detection algorithm.

In the next part of this tutorial we are going to integrate the new beat tracking functionality in the previous spectrum visualizer.

Tutorial files

2020/06/22 – Updated to Unreal Engine 4.24

You may also like:

Support this blog!

For the past year I've been dedicating more of my time to the creation of tutorials, mainly about game development. If you think these posts have either helped or inspired you, please consider supporting this blog. Thank you so much for your contribution!

Hi, I don’t understand why you don’t square the FFT[i] into the summation. Shouldn’t it be FFT[i]^2 for the calculation of the energy?

Yeah, normally you need the square of the module, but since the library returns the normalized values of the FFT, it only reduce the scale of the results.

for (int numBand = 0; numBand < numBands; ++numBand)

{

for (int indexFFT = _beatDetector_bandLimits[numBand];

indexFFT < _beatDetector_bandLimits[numBand + 1];

++indexFFT)

{

for (int channel = 0; channel spectrum[channel][indexFFT];

}

}

spectrum[numBand] /= (_beatDetector_bandLimits[numBand + 1] – _beatDetector_bandLimits[numBand]) * numChannels;

}

Hi, I have a question on this point. In this cycle We compute the operation from BandLimits[0] to BandLimits[1] and from BandLimits[1] to BandLimits[2]. Why from BandLimits[1] to BandLimits[2]? I would have calculated from BandLimits[2] to BandLimits[3] not from BandLimits[1] to BandLimits[2].

Thanks

Yes, the indexes that select the band bounds are wrong. I will update the code