In a previous tutorial we explored how to embed a Python interpreter in a C++ application. Now we will make a few small changes that improve its performance drastically.

Introduction

Performance improvements

Let's start with our calculate_cosine function:

```cpp
float calculate_cosine()
{
    // Starts the interpreter here and finalizes it when guard goes out of scope
    py::scoped_interpreter guard{};
    py::module_ math_module = py::module_::import("math");
    py::object result = math_module.attr("cos")(0.5);
    return py::cast<float>(result);
}
```

Each time we call this function, a new Python interpreter is initialized and the math module is imported before cos is even called. Let's put this function in a class and run a small performance test.
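The cost pattern can be seen even without Python. The sketch below uses a hypothetical `FakeInterpreterGuard` (not part of pybind11) that has the same RAII shape as `py::scoped_interpreter`: it "initializes" in its constructor and "finalizes" in its destructor, but only increments counters. `calculate_cosine_per_call` is likewise a hypothetical name for a function shaped like our `calculate_cosine`.

```cpp
#include <cmath>

// Hypothetical stand-in for py::scoped_interpreter: it starts no Python,
// it only counts how often an "interpreter" is brought up and torn down.
int guard_init_count = 0;
int guard_finalize_count = 0;

struct FakeInterpreterGuard
{
    FakeInterpreterGuard() { ++guard_init_count; }      // "initialize" on construction
    ~FakeInterpreterGuard() { ++guard_finalize_count; } // "finalize" on destruction
};

// Same shape as calculate_cosine(): a new guard on every call, so every
// call pays for a full initialize/finalize cycle before doing any work.
float calculate_cosine_per_call()
{
    FakeInterpreterGuard guard{};
    return static_cast<float>(std::cos(0.5));
}
```

Calling `calculate_cosine_per_call()` 10 times leaves both counters at 10: ten full interpreter lifetimes for ten tiny calculations.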

PythonInterpreter.cpp

#include "PythonInterpreter.h"

namespace py = pybind11;

PythonInterpreter::PythonInterpreter()

{

}

PythonInterpreter::~PythonInterpreter()

{

}

float PythonInterpreter::calculate_cosine()

{

py::scoped_interpreter guard{};

py::module_ math_module = py::module_::import("math");

py::object result = math_module.attr("cos")(0.5);

return py::cast<float>(result);

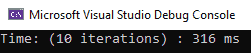

}Let’s see how much time is used with only 10 iterations. In our main.cpp we can instantiate the object of the class and add some time measure around the call of the calculate_cosine function

main.cpp

```cpp
#include <iostream>
#include <chrono>
#include "PythonInterpreter.h"

using namespace std::chrono;

int main()
{
    {
        PythonInterpreter python_interpreter;
        float dumpvalue = 0.0;  // accumulates the results of the calls

        auto start = std::chrono::high_resolution_clock::now();
        for (int i = 0; i < 10; ++i)
        {
            dumpvalue += python_interpreter.calculate_cosine();
        }
        auto stop = std::chrono::high_resolution_clock::now();

        auto duration = std::chrono::duration_cast<milliseconds>(stop - start);
        std::cout << "Time: (10 iterations) : " << duration.count() << " ms" << std::endl;
    }  // python_interpreter is destroyed here

    return 0;
}
```

We are spending about 300 ms to calculate just 10 cosines! That is far too much time for 10 tiny calculations.

So let's move the interpreter initialization into the constructor of our class, without forgetting to finalize the interpreter in the class destructor.

We replace the scoped_interpreter guard with the initialize_interpreter/finalize_interpreter functions and tie the interpreter's lifetime to the lifetime of our PythonInterpreter class: when an object of the class is created, the interpreter is initialized, and when the object is destroyed, the interpreter is finalized.
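Stripped of Python, this is plain RAII. The following sketch uses a hypothetical `FakePythonInterpreter` whose constructor and destructor only bump counters in place of `py::initialize_interpreter()` and `py::finalize_interpreter()`. It also deletes the copy operations, which the original class does not do: a copy would run the destructor a second time and finalize twice. As far as I know, pybind11 also refuses to initialize a second interpreter while one is already running, so only one such object should exist at a time.

```cpp
#include <cmath>

// Hypothetical counters standing in for py::initialize_interpreter() and
// py::finalize_interpreter(); nothing here starts Python.
int runtime_init_count = 0;
int runtime_finalize_count = 0;

class FakePythonInterpreter
{
public:
    FakePythonInterpreter() { ++runtime_init_count; }      // initialize once, on construction
    ~FakePythonInterpreter() { ++runtime_finalize_count; } // finalize once, on destruction

    // Not in the original class: a copy would run the destructor a second
    // time and "finalize" twice, so copying is disabled.
    FakePythonInterpreter(const FakePythonInterpreter&) = delete;
    FakePythonInterpreter& operator=(const FakePythonInterpreter&) = delete;

    // No per-call setup: the "interpreter" is already running here.
    float calculate_cosine() const { return static_cast<float>(std::cos(0.5)); }
};
```

With one object and 10 calls, the init counter stays at 1 for the whole loop and the finalize counter reaches 1 only when the object goes out of scope.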

PythonInterpreter.cpp

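```cpp
#include "PythonInterpreter.h"  // assumed to include <pybind11/embed.h>

namespace py = pybind11;

PythonInterpreter::PythonInterpreter()
{
    py::initialize_interpreter();  // start Python once, when the object is created
}

PythonInterpreter::~PythonInterpreter()
{
    py::finalize_interpreter();    // shut Python down when the object is destroyed
}

float PythonInterpreter::calculate_cosine()
{
    // The interpreter is already running. math is still imported on every call,
    // but after the first import Python returns the cached module from sys.modules.
    py::module_ math_module = py::module_::import("math");
    py::object result = math_module.attr("cos")(0.5);
    return py::cast<float>(result);
}
```

With this change, the interpreter is already initialized whenever calculate_cosine is called.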

We can measure how long it takes to initialize our interpreter by timing the class constructor:

main.cpp

#include <iostream>

#include <chrono>

#include "PythonInterpreter.h"

using namespace std::chrono;

int main()

{

{

auto start = std::chrono::high_resolution_clock::now();

PythonInterpreter python_interpreter;

auto stop = std::chrono::high_resolution_clock::now();

auto duration = std::chrono::duration_cast<milliseconds>(stop - start);

std::cout << "Initialize interpreter: " << duration.count() << " ms" << std::endl;

float dumpvalue = 0.0;

start = std::chrono::high_resolution_clock::now();

for (int i = 0; i < 10; ++i)

{

dumpvalue += python_interpreter.calculate_cosine();

}

stop = std::chrono::high_resolution_clock::now();

duration = std::chrono::duration_cast<milliseconds>(stop - start);

std::cout << "Time (10 iterations) : " << duration.count() << " ms" << std::endl;

}

return 0;

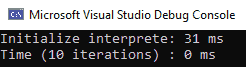

}We can see that the time used to initialize the interpreter is near 31ms, and the time to execute 10 cosine calculation is about microseconds. That explains the previous results of 316ms ~= 310ms = (31ms + 0ms) * 10
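Because `duration_cast<milliseconds>` truncates, anything under 1 ms is printed as 0 ms. If you want to see how many microseconds the calls really take, a small timing helper like the one below could replace the hand-written start/stop pairs. `time_us` is a hypothetical name; the sketch uses `std::chrono::steady_clock`, which is guaranteed to be monotonic, whereas `high_resolution_clock` may be an alias of a clock that can be adjusted.

```cpp
#include <chrono>

// Runs fn() `iterations` times and returns the elapsed wall-clock time
// in whole microseconds (sub-millisecond work no longer shows up as 0).
template <typename Fn>
long long time_us(Fn&& fn, int iterations)
{
    const auto start = std::chrono::steady_clock::now();
    for (int i = 0; i < iterations; ++i)
    {
        fn();
    }
    const auto stop = std::chrono::steady_clock::now();
    return std::chrono::duration_cast<std::chrono::microseconds>(stop - start).count();
}
```

In main.cpp the loop would become something like `time_us([&] { dumpvalue += python_interpreter.calculate_cosine(); }, 10)`.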

And now the whole workload (initialization plus 10 calls) takes about 31 ms, almost all of it spent initializing the interpreter once!
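The arithmetic behind both measurements fits in a two-line cost model. This is only an illustrative sketch with hypothetical function names: it plugs in the roughly 31 ms initialization cost measured above and treats the per-call cost as negligible.

```cpp
// Interpreter created inside every call: each call pays init + work.
double per_call_init_ms(int calls, double init_ms, double call_ms)
{
    return calls * (init_ms + call_ms);
}

// Interpreter created once by the object: init is paid a single time.
double init_once_ms(int calls, double init_ms, double call_ms)
{
    return init_ms + calls * call_ms;
}
```

With 10 calls, `per_call_init_ms(10, 31.0, 0.0)` gives 310 ms and `init_once_ms(10, 31.0, 0.0)` gives 31 ms, matching the two runs. The gap grows linearly with the number of calls.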

Conclusion

In this tutorial we have seen how the way we manage an embedded Python interpreter in a C++ application affects performance: initialize it once and reuse it, instead of creating a new one every time Python code is called. It's a very simple change, but it is easy to overlook, especially the first time we embed a Python interpreter.
